Overview

Bonded network interfaces let you either aggregate interfaces for increased bandwidth or provide higher network availability for your server. This step-by-step tutorial shows how to create a fault-tolerant network bond from two interfaces in an active/passive (active-backup) configuration. A fault-tolerant bond keeps a server reachable on the network if one of its network interfaces goes down. You will typically see this on critical servers, such as database and e-mail servers, that must stay highly available because other applications or services depend on them.

Prerequisites

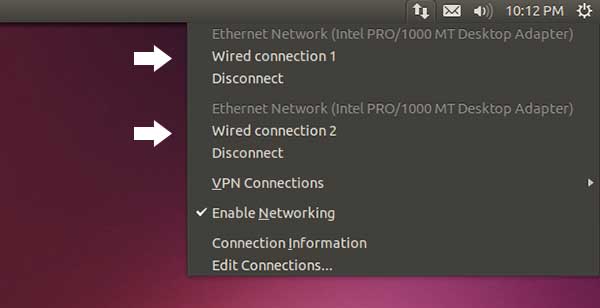

- Two or more network interface cards.

- The MAC address (Hardware Address) of each network interface card. This can be found printed on the physical card or in a virtual server’s network hardware settings.

- All interfaces connected to the same network subnet.
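If the server is already running, you can also read each interface's MAC address from sysfs instead of the card or hypervisor settings. The following is a minimal POSIX sh sketch; the function name list_macs and its optional directory argument are assumptions for illustration. Run it before creating the bond, because once interfaces are enslaved the address reported for a slave may be the bond's rather than the card's own.

```shell
# Hypothetical helper: print "<interface> <MAC>" for every interface under
# /sys/class/net. The optional argument (a directory path) is an assumption
# so the function can be pointed at a copy of that directory.
list_macs() {
    net_dir="${1:-/sys/class/net}"
    for dev in "$net_dir"/*; do
        # Skip entries without a readable address file (or an unmatched glob).
        [ -r "$dev/address" ] || continue
        # ${dev##*/} strips the directory part, leaving the interface name.
        printf '%s %s\n' "${dev##*/}" "$(cat "$dev/address")"
    done
}
```

Running list_macs with no arguments lists the live interfaces; on the lab server you would expect eth0 and eth1 to appear with the MAC addresses in the table below.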

Lab Server Configuration

To make it easier to follow along, our lab server uses the following configuration.

| Network Interface | Interface Name | MAC Address |
|---|---|---|
| NIC #1 | eth0 | 00:0c:29:58:65:cc |
| NIC #2 | eth1 | 00:0c:29:58:65:d6 |

Once the network bond is created, the server will be assigned the following network settings:

| IP Address | Subnet Mask | Gateway | DNS Server |
|---|---|---|---|
| 172.30.0.77 | 255.255.255.0 | 172.30.0.1 | 172.30.0.5 |

Enabling NIC Bonding

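- Out of the box, Debian cannot configure bonded interfaces from /etc/network/interfaces. The bonding driver itself ships with the Debian kernel; what you need is the ifenslave package, which provides the tools and ifupdown hooks that understand the bond options used below.

sudo apt-get install ifenslave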

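- Stop the networking service before loading the bonding module. If you are connected over SSH, perform these steps from the server's console instead, because stopping networking will drop your session.

sudo service networking stop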

- Now load the bonding kernel module.

sudo modprobe bonding
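To confirm the module actually loaded, running lsmod | grep bonding is the usual interactive check. For a scripted check, here is a minimal POSIX sh sketch that scans /proc/modules; the function name module_loaded and its optional file argument are assumptions for illustration.

```shell
# Hypothetical helper: succeed (exit 0) if the named kernel module appears
# in /proc/modules. The optional second argument is an assumption so the
# check can also be run against a saved copy of that file.
module_loaded() {
    module="$1"
    modules_file="${2:-/proc/modules}"
    # The first field of each /proc/modules line is the module name;
    # awk exits 0 when a matching line was found and 1 otherwise.
    awk -v m="$module" '$1 == m { found = 1 } END { exit !found }' "$modules_file"
}
```

For example, module_loaded bonding && echo "bonding module loaded" prints the message only when the module is present.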

Creating the Network Interfaces

- Open the network interface configuration file in a text editor, such as Nano.

sudo nano /etc/network/interfaces

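- To create a fault-tolerant bond named bond0 that uses the first interface (eth0) and the second interface (eth1), add the following lines. The bond-mode 1 option selects active-backup mode, and bond-miimon 100 checks the link state of each interface every 100 milliseconds.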

# Network bond for eth0 and eth1
auto bond0
iface bond0 inet static
    address 172.30.0.77
    netmask 255.255.255.0
    gateway 172.30.0.1
    bond-mode 1
    bond-miimon 100
    slaves eth0 eth1

- Add the following lines to configure the first interface (eth0) as a slave of bond0. It's good practice to set hwaddress to the MAC address of the NIC you expect eth0 to use. Without it, the configuration does not record which physical card eth0 refers to, which is crucial to know during maintenance.

# eth0 - the first network interface
auto eth0
iface eth0 inet manual
    hwaddress ether 00:0c:29:58:65:cc
    bond-master bond0

- Add the following lines to configure the second interface (eth1) as a slave of bond0. As with eth0, remember to include its MAC address.

# eth1 - the second network interface
auto eth1
iface eth1 inet manual
    hwaddress ether 00:0c:29:58:65:d6
    bond-master bond0

- The configuration file should now look similar to the following example. Remember to change the IP addresses and MAC addresses to match your environment.

# This file describes the network interfaces available on your system
# and how to activate them. For more information, see interfaces(5).

# The loopback network interface
auto lo
iface lo inet loopback

# The first network bond
auto bond0
iface bond0 inet static
    address 172.30.0.77
    netmask 255.255.255.0
    gateway 172.30.0.1
    bond-mode 1
    bond-miimon 100
    slaves eth0 eth1

# eth0 - the first network interface
auto eth0
iface eth0 inet manual
    hwaddress ether 00:0c:29:58:65:cc
    bond-master bond0

# eth1 - the second network interface
auto eth1
iface eth1 inet manual
    hwaddress ether 00:0c:29:58:65:d6
    bond-master bond0

- Save your changes and exit the text editor.

- Bring the networking service back online.

sudo service networking start
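If you build several bonded servers, generating the bond and slave stanzas can be less error-prone than typing them by hand. Below is a minimal POSIX sh sketch that reproduces the layout of the configuration above; gen_bond_config and its ifname=mac argument format are hypothetical, and the function only prints to standard output so you can review the result before copying it into /etc/network/interfaces.

```shell
# Hypothetical helper: print an active-backup bond stanza followed by one
# slave stanza per "ifname=mac" argument.
# Usage: gen_bond_config BOND ADDRESS NETMASK GATEWAY IF=MAC [IF=MAC ...]
gen_bond_config() {
    bond="$1"; address="$2"; netmask="$3"; gateway="$4"
    shift 4
    # Collect the slave interface names (the part before "=").
    slaves=""
    for pair in "$@"; do
        slaves="$slaves ${pair%%=*}"
    done
    printf 'auto %s\n' "$bond"
    printf 'iface %s inet static\n' "$bond"
    printf '    address %s\n    netmask %s\n    gateway %s\n' "$address" "$netmask" "$gateway"
    printf '    bond-mode 1\n    bond-miimon 100\n'
    printf '    slaves%s\n' "$slaves"
    # One manual stanza per slave, pinned to its MAC address.
    for pair in "$@"; do
        ifname="${pair%%=*}"
        mac="${pair#*=}"
        printf '\nauto %s\n' "$ifname"
        printf 'iface %s inet manual\n' "$ifname"
        printf '    hwaddress ether %s\n' "$mac"
        printf '    bond-master %s\n' "$bond"
    done
}
```

For the lab server, the call would be gen_bond_config bond0 172.30.0.77 255.255.255.0 172.30.0.1 eth0=00:0c:29:58:65:cc eth1=00:0c:29:58:65:d6.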

Validating the Connection

Now that the bond is created, test it to make sure it is working properly.

- Run the following command to check the status of the bond.

cat /proc/net/bonding/bond0

- If successful, the output should look similar to this:

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)
Primary Slave: None
Currently Active Slave: eth1
MII Status: up
MII Polling Interval (ms): 100
Up Delay (ms): 0
Down Delay (ms): 0

Slave Interface: eth1
MII Status: up
Speed: 1000 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:0c:29:58:65:d6
Slave queue ID: 0

Slave Interface: eth0
MII Status: up
Speed: 1000 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:0c:29:58:65:cc
Slave queue ID: 0

- Now ping another network node or the local gateway to test connectivity. I’ll use the gateway IP mentioned earlier as an example.

ping -c 5 172.30.0.1

- If successful, you should see output similar to the following:

PING 172.30.0.1 (172.30.0.1) 56(84) bytes of data.
64 bytes from 172.30.0.1: icmp_req=1 ttl=64 time=0.340 ms
64 bytes from 172.30.0.1: icmp_req=2 ttl=64 time=0.194 ms
64 bytes from 172.30.0.1: icmp_req=3 ttl=64 time=0.153 ms
64 bytes from 172.30.0.1: icmp_req=4 ttl=64 time=0.343 ms
64 bytes from 172.30.0.1: icmp_req=5 ttl=64 time=0.488 ms

--- 172.30.0.1 ping statistics ---
5 packets transmitted, 5 received, 0% packet loss, time 3998ms
rtt min/avg/max/mdev = 0.153/0.303/0.488/0.121 ms
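If you want to repeat these checks from a script, for example after maintenance on one of the NICs, both outputs can be parsed with standard tools. The following is a minimal POSIX sh sketch; the function names are hypothetical, and it assumes the /proc/net/bonding/bond0 layout and the iputils ping summary line shown in this section.

```shell
# Hypothetical helper: print the currently active slave of a bond.
# The optional argument is an assumption so a saved status file can be read.
active_slave() {
    status_file="${1:-/proc/net/bonding/bond0}"
    sed -n 's/^Currently Active Slave: //p' "$status_file"
}

# Hypothetical helper: print "<slave> <MII status>" for each slave interface.
# The bond-level "MII Status" line is skipped because no slave is pending yet.
slave_status() {
    status_file="${1:-/proc/net/bonding/bond0}"
    awk -F': ' '
        /^Slave Interface:/ { slave = $2; next }
        slave != "" && /^MII Status:/ { print slave, $2; slave = "" }
    ' "$status_file"
}

# Hypothetical helper: read ping output on stdin and print the loss percentage
# from the "N packets transmitted, N received, X% packet loss" summary line.
packet_loss() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}
```

Against the sample status output above, active_slave prints eth1 and slave_status prints eth1 up followed by eth0 up. Piping ping -c 5 172.30.0.1 into packet_loss prints 0 when every packet is answered.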