Overview

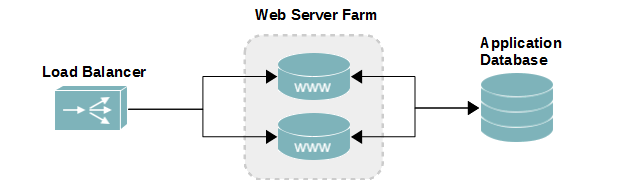

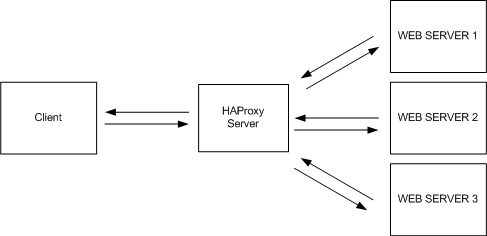

Nginx, the web server, is a fantastically simple and inexpensive frontend load balancer for web applications, large and small. Its ability to handle high concurrency, along with its simple forwarding settings, makes it an excellent choice.

Although it doesn’t have the bells and whistles of enterprise solutions from Citrix or F5, it is very capable of doing the job, and doing it very well. The biggest downfall, depending on your or your team’s skill set, is that it doesn’t have a friendly GUI to guide you. All configuration is done in the Nginx configuration files using a text editor.

Don’t let that stop you from deploying Nginx. Many startups and relatively large technology companies rely on Nginx for load balancing their web applications.

Objectives

For those following along, this tutorial has the following objectives:

- Deploy an Nginx server on CentOS 6

- Load balance 3 Apache web servers

- Web servers 1 and 2 are new, powerful servers and should receive most of the connections.

- Web server 3 is old and should not receive too many connections.

- Connections should be persistent. This is required to ensure users remain on the same server when they log in, as session information isn’t replicated to other servers.

Server Configuration

The lab used for this tutorial had the following configuration. However, the tutorial only covers Nginx on SLLOADBAL01. For creating web servers on CentOS 6, see CentOS 6 Web Server Architecture, Part I.

| Internal Hostname | OS | Role | IP Address |
|---|---|---|---|
| slloadbal01.serverlab.intra | CentOS 6.5 | Nginx Load Balancer | 172.30.0.35 |
| slwebapp01.serverlab.intra | CentOS 6.5 | Apache Web Server | 172.30.0.50 |
| slwebapp02.serverlab.intra | CentOS 6.5 | Apache Web Server | 172.30.0.51 |
| slwebapp03.serverlab.intra | CentOS 6.5 | Apache Web Server | 172.30.0.52 |

Application Configuration

Each web application server’s IP address will be assigned the same public hostname, in addition to its real hostname as listed above. Each server will have WordPress installed, with the exact same configuration and content.

| Website Hostname | Application | Database Server |
|---|---|---|
| www.serverlab.ca | WordPress | webdb01.serverlab.intra |

Listing the database isn’t really that relevant to this tutorial, other than to illustrate that the WordPress database is not hosted on any of the web servers.

Installing Nginx

- Create a YUM repo file for Nginx.

```
vi /etc/yum.repos.d/nginx.repo
```

- Add the following lines to it.

```
[nginx]
name=nginx repo
baseurl=http://nginx.org/packages/centos/$releasever/$basearch/
gpgcheck=0
enabled=1
```

- Save the file and exit the text editor.

- Install Nginx.

```
yum install nginx
```

Configure Nginx

- Open the default site configuration file in a text editor.

```
vi /etc/nginx/conf.d/default.conf
```

- Add an upstream block to the top of the configuration file. The name website1 can be replaced with a name of your choosing. All three backend servers are defined by their internal DNS hostnames; you may use IP addresses instead.

```
upstream website1 {
    server slwebapp01.serverlab.intra;
    server slwebapp02.serverlab.intra;
    server slwebapp03.serverlab.intra;
}
```

- Assign weight values to the servers. The higher the value, the more traffic the server receives relative to the other servers; a server without a weight defaults to 1. Both slwebapp01 and slwebapp02 will be assigned a weight of 5 to spread load evenly between them, while slwebapp03 will be assigned a weight of 1 to minimize its load. Out of every 11 (5+5+1) connections, slwebapp03 will receive only one.

```
upstream website1 {
    server slwebapp01.serverlab.intra weight=5;
    server slwebapp02.serverlab.intra weight=5;
    server slwebapp03.serverlab.intra weight=1;
}
```

- We need users logged into the WordPress CMS to always connect to the same server. If they don’t, they will be shuffled around the servers and constantly have to log in again. We use the ip_hash directive to force each user to always communicate with the same server: Nginx hashes the client’s IP address (the first three octets of an IPv4 address) to choose a server, so the same client keeps reaching the same server while it is available.

```
upstream website1 {
    ip_hash;    # pin each client IP to one server
    server slwebapp01.serverlab.intra weight=5;
    server slwebapp02.serverlab.intra weight=5;
    server slwebapp03.serverlab.intra weight=1;
}
```

- Now we configure the server block to listen for incoming connections and forward them to one of the backend servers. Below the upstream block, add the following server block.

```
server {
    listen 80;                       # listen for HTTP on port 80
    server_name www.serverlab.ca;

    location / {
        proxy_pass http://website1;  # forward requests to the upstream group
    }
}
```

- Save the configuration file and exit the text editor.

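- Start Nginx if it isn’t running yet, or reload it if it is already running so that it picks up the new configuration.

```
service nginx start
service nginx reload
```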
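Nginx sends more traffic to servers with a higher weight. To make the arithmetic concrete, here is a minimal Python model of weighted round-robin selection, assuming the smooth weighted round-robin scheme that Nginx’s default round-robin balancing is commonly described as using. It is a simplified sketch, not Nginx’s code: the smooth_wrr function is hypothetical, and failures, max_fails and ip_hash are ignored. With slwebapp01 and slwebapp02 at weight 5 and slwebapp03 at weight 1, slwebapp03 gets 1 of every 11 picks.

```python
from collections import Counter


def smooth_wrr(weights: dict[str, int], n: int) -> list[str]:
    """Pick n servers using smooth weighted round-robin (simplified model)."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every server gains its weight; the highest running total wins.
        for name, weight in weights.items():
            current[name] += weight
        best = max(current, key=current.get)
        # The winner pays back the total weight, so it falls behind the others.
        current[best] -= total
        picks.append(best)
    return picks


weights = {
    "slwebapp01.serverlab.intra": 5,
    "slwebapp02.serverlab.intra": 5,
    "slwebapp03.serverlab.intra": 1,
}
picks = smooth_wrr(weights, 11)
for name, hits in Counter(picks).items():
    print(f"{name}: {hits}")  # 5, 5 and 1 hits respectively
```

The "smooth" part spreads slwebapp03’s single pick into the middle of the cycle instead of sending it a burst, which keeps the old server’s load even over time.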

Additional Options and Directives

Marking a Server as Down (offline)

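You may need to take one of the servers offline for emergency maintenance without impacting your users. The down parameter of the server directive allows you to do this. When ip_hash is in use, marking a server as down, rather than removing its line, preserves the current hashing of client IP addresses, so users on the other servers are not reshuffled.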

```
upstream website1 {
    ip_hash;
    server slwebapp01.serverlab.intra weight=5 down;  # offline for maintenance
    server slwebapp02.serverlab.intra weight=5;
    server slwebapp03.serverlab.intra weight=1;
}
```
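The following minimal Python sketch illustrates why marking a server down beats deleting it under ip_hash. It is a simplified model, not Nginx’s actual hash function: pick_server is hypothetical, CRC32 stands in for Nginx’s hash, and the 20-retry limit with a first-available fallback is an assumption. Like ip_hash, it keys on the first three octets of an IPv4 address and chooses servers in proportion to their weight; a down server keeps its slot, so only its own clients move.

```python
import zlib


def pick_server(client_ip: str, servers: list[tuple[str, int, bool]]) -> str:
    """Simplified ip_hash model; servers holds (name, weight, is_down) tuples."""
    if all(is_down for _, _, is_down in servers):
        raise RuntimeError("no servers available")
    # Like ip_hash, only the first three octets of an IPv4 address form the key.
    key = ".".join(client_ip.split(".")[:3])
    # Down servers keep their slots, so other clients' mappings do not shift.
    total = sum(weight for _, weight, _ in servers)
    for attempt in range(20):
        # CRC32 stands in for Nginx's real hash; the attempt number acts as a
        # salt so that each retry lands on a different point.
        point = zlib.crc32(f"{key}#{attempt}".encode()) % total
        # Walk the weighted slots to find the server that owns this point.
        for name, weight, is_down in servers:
            if point < weight:
                break
            point -= weight
        if not is_down:
            return name
    # After 20 misses, fall back to the first server that is up.
    return next(name for name, _, is_down in servers if not is_down)


upstream = [
    ("slwebapp01.serverlab.intra", 5, False),
    ("slwebapp02.serverlab.intra", 5, False),
    ("slwebapp03.serverlab.intra", 1, False),
]
# The same group with slwebapp01 marked down for maintenance.
maintenance = [(n, w, n == "slwebapp01.serverlab.intra") for n, w, _ in upstream]

clients = [f"10.0.{i}.25" for i in range(20)]
before = {ip: pick_server(ip, upstream) for ip in clients}
after = {ip: pick_server(ip, maintenance) for ip in clients}

# Only clients that were pinned to slwebapp01 end up on a different server.
moved = [ip for ip in clients if before[ip] != after[ip]]
print(f"{len(moved)} of {len(clients)} clients moved:", moved)
```

Deleting slwebapp01’s line instead would change the total weight and therefore the slot every client hashes into, so users on slwebapp02 and slwebapp03 could be moved too and have to log in again.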

Health Checks

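Enable health checks to automatically check the health of each server in an upstream group. Note that the health_check directive is only available in the commercial NGINX Plus; it goes in the location block that proxies to the group, and the upstream group needs a shared memory zone defined with the zone directive. By default, each server is checked every 5 seconds with an HTTP request for /. If the server doesn’t return a 2xx or 3xx status, it is flagged as unhealthy and no longer has connections forwarded to it. Open-source Nginx only offers passive checks, through the max_fails and fail_timeout parameters of the server directive.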

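```
upstream website1 {
    zone website1 64k;    # shared memory zone, required for health checks
    server slwebapp01.serverlab.intra;
    server slwebapp02.serverlab.intra;
    server slwebapp03.serverlab.intra;
}

server {
    listen 80;
    server_name www.serverlab.ca;

    location / {
        proxy_pass http://website1;
        health_check;     # NGINX Plus only
    }
}
```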
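As a rough illustration of what an active check does, here is a minimal Python probe built on the standard library’s http.client. It is a sketch under assumptions, not how NGINX Plus implements health_check: the function names are hypothetical, and it mirrors only the defaults described above (a GET for / every 5 seconds, with any 2xx or 3xx status counted as healthy).

```python
import http.client
import time


def is_healthy_status(status: int) -> bool:
    """Treat any 2xx or 3xx status as healthy."""
    return 200 <= status < 400


def probe(host: str, port: int = 80, uri: str = "/", timeout: float = 2.0) -> bool:
    """Send one GET request and report whether the server looks healthy."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", uri)
        # http.client does not follow redirects, so a 3xx is checked as-is.
        return is_healthy_status(conn.getresponse().status)
    except (OSError, http.client.HTTPException):
        # Refused connections, timeouts, DNS errors and garbled replies
        # all count as unhealthy.
        return False
    finally:
        conn.close()


def check_loop(servers: list[str], interval: float = 5.0, rounds: int = 3) -> None:
    """Probe every server once per interval and print the healthy ones."""
    for _ in range(rounds):
        healthy = [s for s in servers if probe(s)]
        print("healthy:", healthy)
        time.sleep(interval)
```

For example, calling check_loop with the three slwebapp hostnames would print the set of healthy servers three times, five seconds apart. A real balancer would also require several consecutive passes or failures before changing a server’s state; this sketch flips on a single result.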

Upstream Server Ports

Unless a port is specified, all requests will be forwarded to port 80. If your backend web servers host the application on another port, append it to the server name or IP address after a colon.

```
upstream website1 {
    server slwebapp01.serverlab.intra:8080;
    server slwebapp02.serverlab.intra:8080;
    server slwebapp03.serverlab.intra:9000;
}
```

Backup Servers

You may need a server to act as a hot backup for when nodes unexpectedly go down. A server marked with the backup parameter only receives traffic when the primary servers are unavailable, and remains idle while they are healthy. Note that the backup parameter cannot be combined with the ip_hash load-balancing method.

```
upstream website1 {
    server slwebapp01.serverlab.intra;
    server slwebapp02.serverlab.intra;
    server slwebapp03.serverlab.intra;
    server slwebbkup01.serverlab.intra backup;
}
```
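The backup rule can be modeled in a few lines of Python. This is a minimal sketch with a hypothetical pick_with_backup helper, assuming plain round robin among the primary servers and ignoring how Nginx detects failures; it shows that the backup only receives requests once no primary server is available.

```python
from collections.abc import Iterator
from itertools import count


def pick_with_backup(primaries: list[str], backups: list[str],
                     down: set[str], rr: Iterator[int]) -> str:
    """Round robin over available primaries; use backups only if none are up."""
    available = [s for s in primaries if s not in down]
    if not available:
        # Every primary is unavailable, so fall through to the backup servers.
        available = [s for s in backups if s not in down]
    if not available:
        raise RuntimeError("no servers available")
    return available[next(rr) % len(available)]


primaries = [
    "slwebapp01.serverlab.intra",
    "slwebapp02.serverlab.intra",
    "slwebapp03.serverlab.intra",
]
backups = ["slwebbkup01.serverlab.intra"]
rr = count()

# One primary down: the other primaries absorb its share, the backup stays idle.
print(sorted({pick_with_backup(primaries, backups, {primaries[0]}, rr) for _ in range(6)}))
# All primaries down: only now does slwebbkup01 receive requests.
print(sorted({pick_with_backup(primaries, backups, set(primaries), rr) for _ in range(3)}))
```

Because backup cannot be combined with ip_hash, this behavior only applies to upstream groups using the default round-robin method, like the example above.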

More Options and Directives

There are so many directives for managing Nginx load balancers that it doesn’t make sense to list them all here. I’ve kept it short to highlight popular options. I do recommend that you read through the upstream module documentation for a complete list of capabilities.

Conclusion

You now have a functional load balancer for your website, spreading load among three nodes. It may not have all of the bells and whistles of enterprise balancers, but it is very fast and efficient, and it can balance connections with minimal hardware resources. It certainly gives typical hardware load balancers a run for their money, which is why it is used by large Internet websites all over the world.

If you need a simple and lightning-fast balancer for a web application, I definitely recommend using Nginx.