Overview

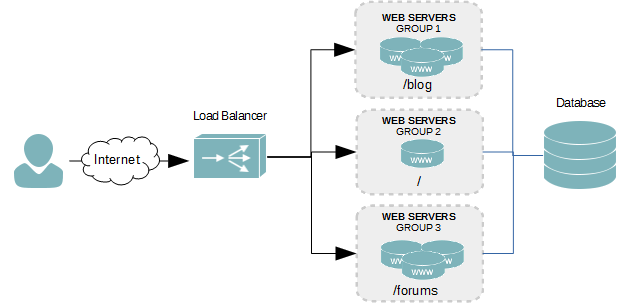

Layer 4 load balancing is the simplest method of distributing network traffic across multiple servers. Because it works at the transport layer and never inspects the content of a request, it is very fast and needs minimal hardware. That simplicity comes with limitations, however. The biggest drawback is that every web server must host exactly the same content; otherwise, users will see different content depending on which server happens to handle their request.

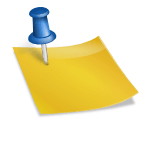

The figure shows how a web application is balanced at layer 4. An incoming request for your domain lands on the load balancer first. The load balancer’s job is then to forward the request to one of the web servers in the backend. If your application stores content in a database, as depicted in the figure, each web server must connect to the same database.

After the request is processed by whichever web server it was forwarded to, the response is returned to the user. However, because every incoming request passes through the load balancer, the user’s next HTTP request may land on a different backend web server. Normally this isn’t an issue, unless your application allows user logins. It can be solved with session persistence, which tells the load balancer which backend server a client’s requests should go to. Note that cookie-based persistence requires the balancer to read HTTP (layer 7); at layer 4, persistence is usually based on the client’s source IP address, as with the source algorithm described later.

Of course, not all web applications require anything more than layer 4 balancing. For example, a blog with heavy traffic probably doesn’t need anything more. Another example would be a simple application you’ve developed and published with its own registered domain name.

Follow along with this tutorial to learn how to create simple layer 4 balancers for your application.

Prerequisites

This tutorial assumes you’ve already deployed HAProxy onto a separate server. If you have not, read Deploying an HAProxy Load Balancer on CentOS 6.

Create a Floating IP Address

A floating IP address, a term used by most load balancers, is essentially an additional IP address added to a physical network interface. It isn’t strictly necessary, because you can use the load balancer’s own IP address instead. However, it becomes a requirement when you balance several different applications.

- Create a new interface configuration file by copying an existing one and appending a colon (:) and the value 0 to the file name. We’re going to add the floating IP to our first interface in this tutorial.

cp /etc/sysconfig/network-scripts/ifcfg-eth0 /etc/sysconfig/network-scripts/ifcfg-eth0:0

- Open the new file in a text editor.

- Change the interface’s name from eth0 to eth0:0, as shown in the example below. If you were adding the floating IP to your second network interface, eth1, the name would instead be eth1:0. To add a second floating IP address to the same interface, increment the last value by one, making it eth0:1 (or eth1:1 on eth1).

DEVICE=eth0:0

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=no

BOOTPROTO=none

IPADDR=172.30.0.30

PREFIX=24

IPV4_FAILURE_FATAL=yes

IPV6INIT=no

NAME="System eth0:0"

- Save your changes and exit the text editor.

- Restart the network services to initialize the floating IP.

service network restart

- Alternatively, you could just bring the physical interface down and then up again.

ifdown eth0

ifup eth0

- Verify that the new interface is online and assigned the floating IP by using the ifconfig command. The output should look similar to the example below.

eth0 Link encap:Ethernet HWaddr 00:0C:29:E9:C0:05

inet addr:172.30.0.25 Bcast:172.30.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fee9:c005/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:27 errors:0 dropped:0 overruns:0 frame:0

TX packets:32 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2316 (2.2 KiB) TX bytes:3244 (3.1 KiB)

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:E9:C0:05

inet addr:172.30.0.30 Bcast:172.30.0.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
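The alias naming rule from the steps above (parent interface, a colon, then an index) can be sketched as a small Python helper that renders the contents of an alias file. This is only an illustration: the function name `alias_ifcfg` and its parameters are hypothetical, and the fixed settings are copied from the example file in this tutorial rather than from any authoritative template.

```python
def alias_ifcfg(parent, index, ipaddr, prefix=24):
    """Render ifcfg-style settings for an alias such as eth0:0 (hypothetical helper)."""
    device = f"{parent}:{index}"  # e.g. "eth0" and 0 become "eth0:0"
    lines = [
        f"DEVICE={device}",
        "TYPE=Ethernet",
        "ONBOOT=yes",
        "NM_CONTROLLED=no",
        "BOOTPROTO=none",
        f"IPADDR={ipaddr}",
        f"PREFIX={prefix}",
        "IPV4_FAILURE_FATAL=yes",
        "IPV6INIT=no",
        f'NAME="System {device}"',
    ]
    return "\n".join(lines) + "\n"


# First floating IP on eth0, matching the example in this tutorial.
print(alias_ifcfg("eth0", 0, "172.30.0.30"))
```

Calling it with an index of 1 would produce the settings for a second floating IP on the same interface.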

Create a Virtual Server

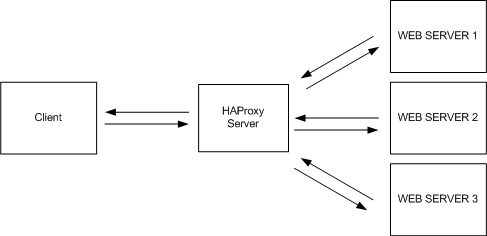

A virtual server, in the context of a load balancer, is an IP address used to listen for incoming connections for a load-balanced cluster. Typically, a floating IP is assigned to the virtual server. When the virtual server receives a connection, it forwards it to the backend servers (the servers in a load-balanced cluster or group). We define our virtual servers by adding a frontend context below the global context of the HAProxy configuration file.

- Open the HAProxy configuration file in a text editor. The default location in CentOS is /etc/haproxy/haproxy.cfg.

- Create a virtual server and assign the floating IP address to it. We’ll call our virtual server webapp1.

frontend webapp1

bind 172.30.0.30:80

default_backend webapp1-servers

bind: The IP address and port the virtual server will listen on for incoming connections. This can be the IP address of a physical network interface on the load balancer, a floating IP address, or a star (*). Using a * means it will listen on all network interfaces.

default_backend: The name of the server group requests will be forwarded to.

Backend Server Cluster

We’re now going to configure the backend cluster for our virtual server. This is where we place the information about our real servers, the ones actually hosting our content. The backend cluster is given the same name defined by the default_backend option of the frontend context.

- Create the backend server group for the virtual server webapp1.

backend webapp1-servers

- Define the load balancing algorithm. We’ll use Round-robin.

backend webapp1-servers

balance roundrobin

- Set the mode to tcp.

backend webapp1-servers

balance roundrobin

mode tcp

mode: Sets the running mode or protocol of the instance. Available values are tcp, http, and health. tcp is used for layer 4 balancing, whereas http is for layer 7.

- Add the real servers that are hosting the web application. We’ll add three in our example. Each one is hosting the application on port 80.

backend webapp1-servers

balance roundrobin

mode tcp

server webserver1 192.168.1.200:80

server webserver2 192.168.1.201:80

server webserver3 192.168.1.202:80

- Save your changes to the HAProxy configuration file.

- If HAProxy is running, restart it to load the new configuration.

service haproxy restart

- If HAProxy is not running, start it.

service haproxy start
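To see how the frontend and backend pieces fit together, the sketch below assembles them into one block of configuration text. It is a minimal illustration, not a tool shipped with HAProxy: the function `render_layer4_config` is hypothetical, and it assumes the backend is always named after the virtual server with a `-servers` suffix, as in this tutorial.

```python
def render_layer4_config(name, bind, servers, algorithm="roundrobin"):
    """Return frontend and backend sections for one TCP-mode virtual server."""
    backend = f"{name}-servers"  # naming convention assumed from this tutorial
    lines = [
        f"frontend {name}",
        f"    bind {bind}",
        f"    default_backend {backend}",
        "",
        f"backend {backend}",
        f"    balance {algorithm}",
        "    mode tcp",
    ]
    # One "server" line per real server: a label followed by address:port.
    lines += [f"    server {label} {address}" for label, address in servers]
    return "\n".join(lines) + "\n"


config = render_layer4_config(
    "webapp1",
    "172.30.0.30:80",
    [
        ("webserver1", "192.168.1.200:80"),
        ("webserver2", "192.168.1.201:80"),
        ("webserver3", "192.168.1.202:80"),
    ],
)
print(config)
```

The printed text mirrors the sections built step by step above; it would still need to be placed in the HAProxy configuration file by hand.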

Balancing Algorithms

roundrobin

Each server is used in turn, according to its weight. This is the smoothest and fairest algorithm when the servers’ processing time remains equally distributed. This algorithm is dynamic, which means that server weights may be adjusted on the fly, for slow starts for instance.
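The idea of taking turns according to weight can be illustrated with a deliberately simplified Python sketch. It is not HAProxy's implementation, which interleaves servers more smoothly; here each server simply appears in the rotation as many times as its weight. The weights are made up for the example.

```python
from itertools import cycle


def weighted_round_robin(weights):
    """Cycle through server names, repeating each one according to its weight."""
    expanded = [name for name, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)


# Hypothetical weights: webserver1 gets twice the share of the others.
picker = weighted_round_robin({"webserver1": 2, "webserver2": 1, "webserver3": 1})
first_eight = [next(picker) for _ in range(8)]
print(first_eight)
```

Over any four consecutive picks, webserver1 is chosen twice and the other two servers once each.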

leastconn

The server with the lowest number of connections receives the connection. Round-robin is performed within groups of servers of the same load to ensure that all servers will be used. This algorithm is recommended where very long sessions are expected, such as LDAP, SQL, or TSE, but it is not well suited to protocols using short sessions, such as HTTP. This algorithm is dynamic, which means that server weights may be adjusted on the fly, for slow starts for instance.
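As a rough sketch of the selection rule, the Python snippet below picks the server with the fewest active connections. The connection counts are invented for the example, and ties are broken by dictionary order rather than by the round-robin that HAProxy applies among equally loaded servers.

```python
def pick_least_connections(active):
    """Return the server name with the fewest active connections."""
    return min(active, key=active.get)


# Hypothetical snapshot of active connections per server.
active = {"webserver1": 3, "webserver2": 1, "webserver3": 2}

chosen = pick_least_connections(active)
active[chosen] += 1  # the new connection now counts against that server
print(chosen, active)
```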

source

The source IP address is hashed and divided by the total weight of the running servers to designate which server will receive the request. This ensures that the same client IP address will always reach the same server as long as no server goes down or up. If the hash result changes due to the number of running servers changing, many clients will be directed to a different server. This algorithm is generally used in TCP mode where no cookie may be inserted. It may also be used on the Internet to provide a best-effort stickiness to clients which refuse session cookies. This algorithm is static, which means that changing a server’s weight on the fly will have no effect.
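The mapping from a client address to a server can be sketched as follows. This is an illustration under stated assumptions: `zlib.crc32` stands in for whatever hash HAProxy uses internally, and the hash is reduced modulo the total weight to select a slot. The client address and weights are made up.

```python
import zlib


def pick_by_source(client_ip, servers):
    """Map a client IP to a server; servers is a list of (name, weight) pairs."""
    total_weight = sum(weight for _, weight in servers)
    # crc32 is a stand-in hash, not necessarily what HAProxy uses.
    slot = zlib.crc32(client_ip.encode("ascii")) % total_weight
    for name, weight in servers:
        if slot < weight:
            return name
        slot -= weight


servers = [("webserver1", 1), ("webserver2", 1), ("webserver3", 1)]
first = pick_by_source("203.0.113.7", servers)
second = pick_by_source("203.0.113.7", servers)
print(first, second)  # the same client lands on the same server both times
```

Because the slot depends on the total weight, removing a server from the list changes the divisor and can move many clients to a different server, which is the behaviour described above.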

uri

The left part of the URI (before the question mark) is hashed and divided by the total weight of the running servers. The result designates which server will receive the request. This ensures that the same URI will always be directed to the same server as long as no server goes up or down. This is used with proxy caches and anti-virus proxies in order to maximize the cache hit rate. Note that this algorithm may only be used in an HTTP backend. This algorithm is static, which means that changing a server’s weight on the fly will have no effect.
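A minimal sketch of the same idea for URIs: only the part before the question mark feeds the hash, so requests that differ only in their query string reach the same server. As before, `zlib.crc32` is a stand-in hash, equal weights are assumed, and the paths are hypothetical.

```python
import zlib


def pick_by_uri(uri, servers):
    """Hash the part of the URI before '?' and map it onto one server."""
    path = uri.split("?", 1)[0]  # drop the query string, if any
    return servers[zlib.crc32(path.encode("utf-8")) % len(servers)]


servers = ["webserver1", "webserver2", "webserver3"]
a = pick_by_uri("/img/logo.png?v=1", servers)
b = pick_by_uri("/img/logo.png?v=2", servers)
print(a == b)  # True: both requests share the same left part
```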

url_param

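Similar to uri, except that the hash is computed from the value of a named URL parameter in the query string rather than from the left part of the URI. It is typically used to track a user identifier carried in the request, so that the same user is always sent to the same server as long as no server goes up or down. If the parameter is missing or has no value, round-robin is applied instead. Like uri, this algorithm may only be used in an HTTP backend.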
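Hashing on a URL parameter can be sketched like this. The parameter name `userid` and the URLs are hypothetical, `zlib.crc32` is again a stand-in hash, and the function returns `None` when the parameter is absent so that a caller could fall back to round-robin, which is what HAProxy does in that case.

```python
import zlib
from urllib.parse import parse_qs, urlsplit


def pick_by_url_param(url, param, servers):
    """Hash the value of one query-string parameter onto a server."""
    values = parse_qs(urlsplit(url).query).get(param)
    if not values:
        return None  # parameter missing or empty: caller should fall back
    return servers[zlib.crc32(values[0].encode("utf-8")) % len(servers)]


servers = ["webserver1", "webserver2", "webserver3"]
page_one = pick_by_url_param("/cart?userid=42&page=1", "userid", servers)
page_two = pick_by_url_param("/checkout?page=2&userid=42", "userid", servers)
missing = pick_by_url_param("/cart?page=1", "userid", servers)
print(page_one == page_two, missing)
```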

Health Checks

When a server in our load balancing cluster becomes unavailable, we do not want traffic to be forwarded to it. Health checks are a way of automatically discovering servers that stop responding. Health checks are enabled by adding the check option to a server in the backend, as seen in the example below.

backend webapp1-servers

balance roundrobin

mode tcp

server webserver1 192.168.1.200:80 check

server webserver2 192.168.1.201:80 check

server webserver3 192.168.1.202:80 check
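In tcp mode, a basic health check amounts to trying to open a TCP connection to the server's address and port. The Python sketch below shows that idea in isolation; the function name `tcp_check` and the two-second timeout are assumptions made for the example, not HAProxy settings.

```python
import socket


def tcp_check(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


# Addresses taken from the backend example in this tutorial.
backends = [("192.168.1.200", 80), ("192.168.1.201", 80), ("192.168.1.202", 80)]

if __name__ == "__main__":
    for host, port in backends:
        state = "up" if tcp_check(host, port) else "down"
        print(f"{host}:{port} is {state}")
```

A load balancer repeats a probe like this at intervals and stops forwarding traffic to servers that fail it.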

Server Maintenance

Every server requires some form of maintenance at some point in its life. To bring a server offline for maintenance and to prevent traffic from being forwarded to it while it’s offline, we can use the disabled option.

backend webapp1-servers

balance roundrobin

mode tcp

server webserver1 192.168.1.200:80 check

server webserver2 192.168.1.201:80 check

server webserver3 192.168.1.202:80 check disabled

Conclusion

Our layer 4 load balancer is now configured. As your application grows in popularity, you can simply add additional nodes to the backend cluster.
